Executive brief

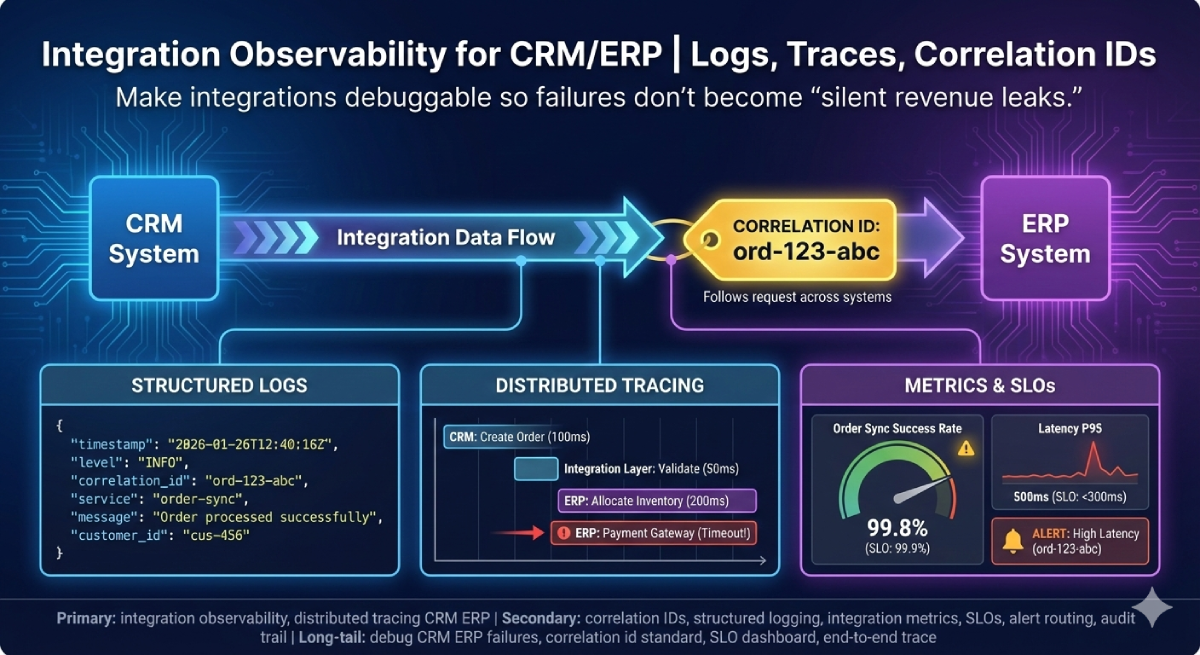

Most CRM↔ERP integrations don’t “go down”. They fail silently.

One missing webhook, one stuck queue, or one mis-mapped status code can quietly leak revenue: wrong prices, stale inventory, missing order updates, delayed invoices.

Integration observability turns that risk into a managed operating model using correlation IDs, structured logs, distributed tracing, metrics, alerting, and SLOs.
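To make the first two building blocks concrete, here is a minimal sketch, not a prescribed implementation, of a CRM webhook handler that stamps every structured log line and the outbound ERP request with one correlation ID. The header name `X-Correlation-ID`, the payload fields, and the function names are hypothetical and chosen only for illustration.

```python
import json
import logging
import uuid

# Hypothetical header name: a common convention, not a standard.
CORRELATION_HEADER = "X-Correlation-ID"

logger = logging.getLogger("integration")


def log_event(event: str, correlation_id: str, **fields) -> str:
    """Emit one structured (JSON) log line keyed by the correlation ID."""
    record = {"event": event, "correlation_id": correlation_id, **fields}
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line


def handle_crm_webhook(payload: dict, headers: dict) -> dict:
    """Receive a (hypothetical) CRM order webhook and build the ERP request."""
    # Reuse the caller's ID when present so the whole chain shares one key;
    # otherwise mint a new one at the edge of the integration.
    correlation_id = headers.get(CORRELATION_HEADER) or str(uuid.uuid4())
    log_event("crm.webhook.received", correlation_id,
              order_id=payload["order_id"], status=payload["status"])

    # Propagate the same ID downstream so ERP-side logs can be joined to ours.
    erp_request = {
        "headers": {CORRELATION_HEADER: correlation_id},
        "body": {"order_ref": payload["order_id"], "state": payload["status"]},
    }
    log_event("erp.request.sent", correlation_id, order_ref=payload["order_id"])
    return erp_request


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    handle_crm_webhook({"order_id": "SO-1001", "status": "won"}, {})
```

Because every hop logs the same `correlation_id`, a single search reconstructs one order's path from CRM to ERP, which is what makes a "silent" failure visible. Distributed tracing generalizes the same idea with standard propagation headers and timed spans.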

Implementation standards for signing, idempotency, and contract discipline: /api-integrations.

For a system-level review of your integration layer and its production readiness: /architecture-review.