Need help implementing these insights?

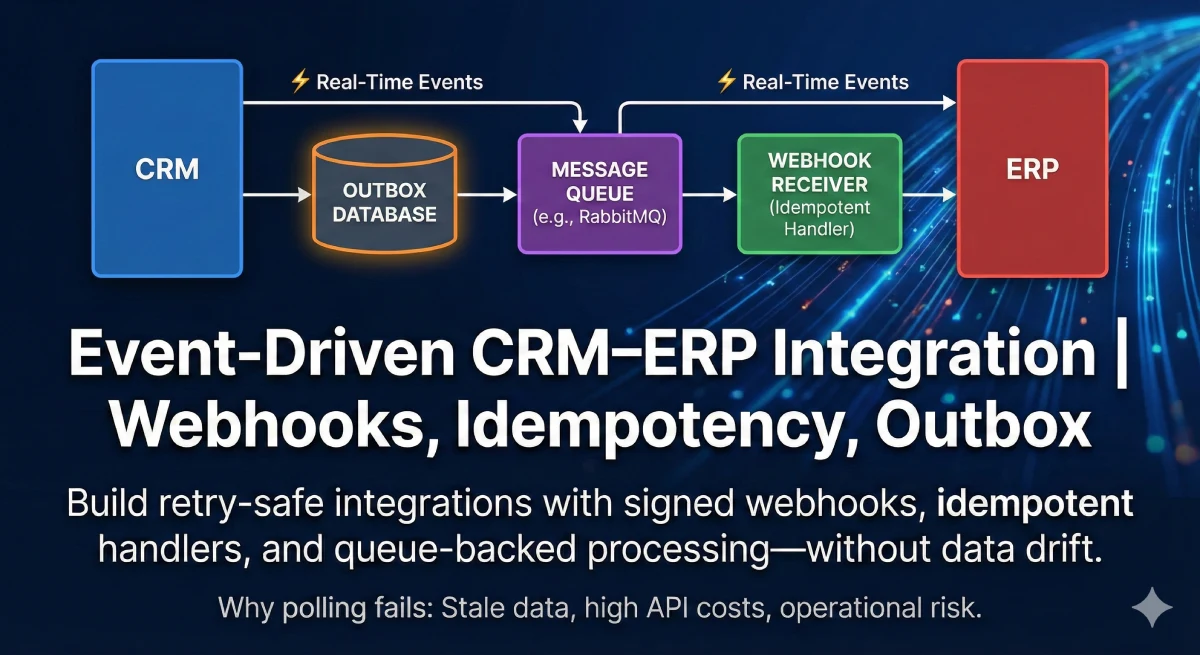

If you want an event-driven CRM-ERP integration that survives retries, peak load, and third-party instability, start with a practical roadmap: contracts, idempotency, outbox, and production monitoring.


